Wubba Lubba Dub Dub? More like HTTP 200 OK.

Over the last few weeks, our servers were absolutely hammered by 450 million API requests from engineers trying to smuggle 1,000 neurotic teenagers to safety through increasingly dangerous portals. It was messy, math-heavy, and arguably the most chaotic coding challenge we've ever run.

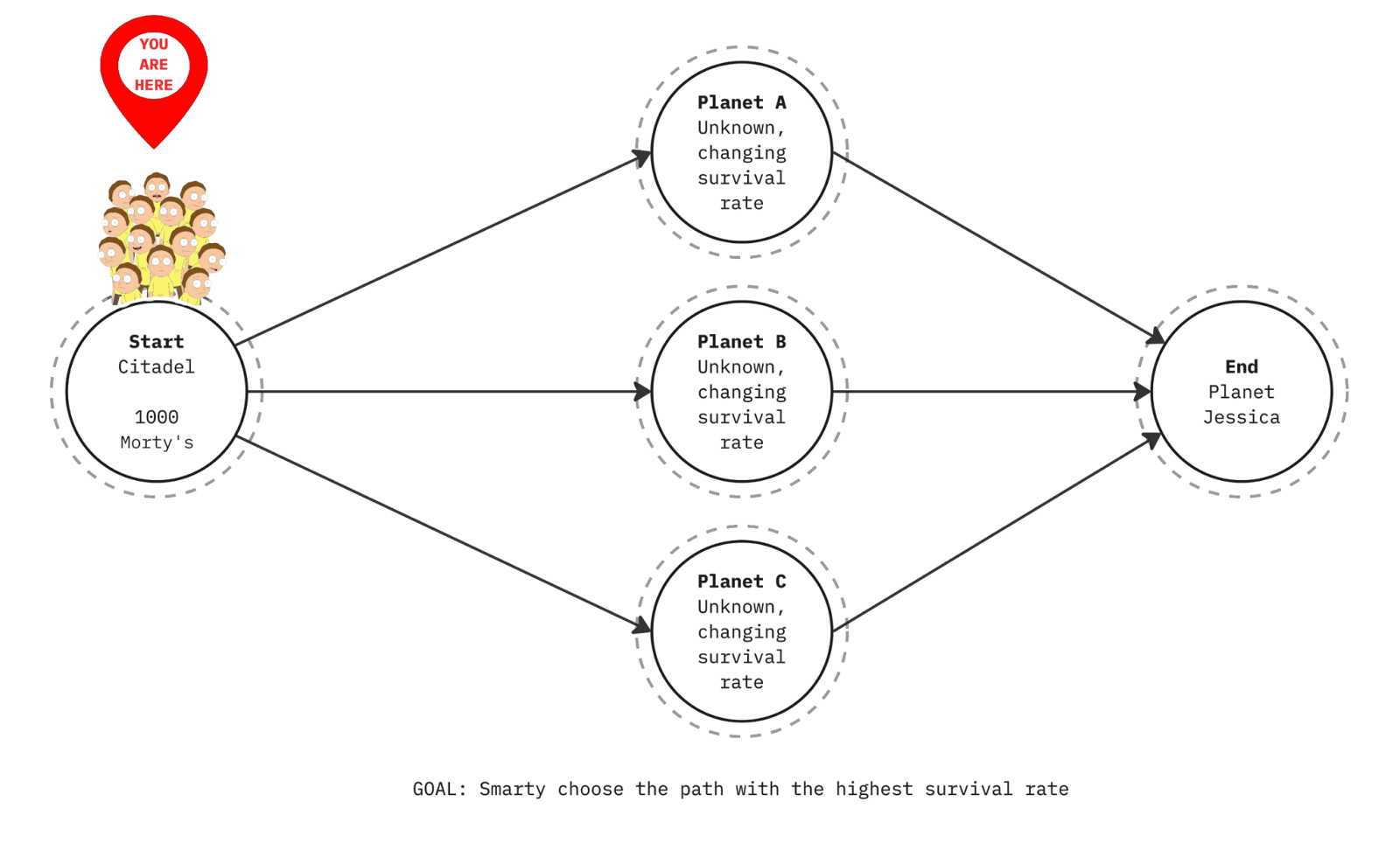

The setup was simple enough to explain at a bar: you've got 1,000 Morties stuck in the Citadel who need to reach Planet Jessica. Your knock-off portal gun can't make the jump directly, so you have to route through one of three intermediate planets, each with its own survival rate that changes over time. Send groups of up to three Morties at a time and get as many to safety as possible. Classic multi-armed bandit problem, right?
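For reference, the textbook answer to a *classic* bandit is something like Beta-Bernoulli Thompson sampling, sketched below. It assumes each planet's survival rate is fixed, and that assumption is exactly what this challenge broke. The function names, rates, and trip count are illustrative and not taken from the game. The trip count of 334 comes from 1,000 Morties sent as 333 groups of three plus one final single Morty.

```python
import random


def thompson_pick(successes, failures, rng):
    """Draw from each planet's Beta(1 + s, 1 + f) posterior; return the index of the largest draw."""
    draws = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
    return max(range(len(draws)), key=lambda i: draws[i])


def run_stationary_bandit(survival_rates, trips, seed=0):
    """Thompson sampling against FIXED survival rates; returns per-planet successes and failures."""
    rng = random.Random(seed)
    successes = [0] * len(survival_rates)
    failures = [0] * len(survival_rates)
    for _ in range(trips):
        planet = thompson_pick(successes, failures, rng)
        # Each trip is one Bernoulli draw: the whole group survives or it doesn't.
        if rng.random() < survival_rates[planet]:
            successes[planet] += 1
        else:
            failures[planet] += 1
    return successes, failures
```

With hypothetical rates such as `run_stationary_bandit([0.2, 0.5, 0.9], trips=334)`, most trips should end up on the third planet. Against rates that oscillate, though, the Beta counts keep accumulating evidence from phases that no longer apply. That is one plausible reason a baseline like this stalls.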

The "Unpromptable" Problem

We designed the "Morty Express" challenge to be deceptively simple. We wanted to see if we could create a problem that was "unpromptable" – something that required genuine engineering intuition, data analysis, and infrastructure skills, rather than just a cleverly worded query to an LLM.

The results backed this up. When we ran top-tier AI coding tools like Claude Code against it, they plateaued around 60%. To get into the 90s, you needed more than code generation; you needed to treat the API like a black-box physics experiment.

The Deceptively Simple Challenge

The interface was straightforward: a REST API with four endpoints. Request a token, start an episode, send Morties through portals to one of three planets, and check your status. Each trip had a binary outcome: your Morties either survived the journey or they didn't. The survival probability for each planet changed dynamically based on the number of trips taken.

What we didn't tell anyone: the entire game engine was only 25 lines of code, governed by a single random seed (an integer from 0 to 1000) that controlled everything.
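We're not publishing the real 25 lines here. The class below is a hypothetical local stand-in that shows how a single integer seed can drive both every hidden parameter and every trip outcome. The sinusoidal form anticipates the next section. Everything else is an assumption made for illustration: the parameter ranges, the rule that probability depends on the global trip count, and the names `MortyExpressSim`, `send`, and `status`.

```python
import math
import random


class MortyExpressSim:
    """Hypothetical stand-in for the game engine: one seed drives all parameters and outcomes."""

    def __init__(self, seed, morties=1000, planets=3):
        self.rng = random.Random(seed)
        # Assumed per-planet sinusoid: (baseline, amplitude, period in trips, phase offset).
        self.params = [
            (
                self.rng.uniform(0.5, 0.7),
                self.rng.uniform(0.1, 0.3),
                self.rng.uniform(20, 200),
                self.rng.uniform(0, 2 * math.pi),
            )
            for _ in range(planets)
        ]
        self.remaining = morties
        self.saved = 0
        self.trips = 0

    def survival_probability(self, planet):
        """Survival chance for the next trip; assumed to depend only on the trip count."""
        base, amp, period, phase = self.params[planet]
        p = base + amp * math.sin(2 * math.pi * self.trips / period + phase)
        return min(1.0, max(0.0, p))

    def send(self, planet, count):
        """Send 1-3 Morties through one planet; all of them survive or none do."""
        if not 1 <= count <= min(3, self.remaining):
            raise ValueError("send between 1 and 3 Morties, and no more than remain")
        survived = self.rng.random() < self.survival_probability(planet)
        self.remaining -= count
        self.saved += count if survived else 0
        self.trips += 1
        return survived

    def status(self):
        return {"saved": self.saved, "remaining": self.remaining, "trips": self.trips}
```

Two simulators with the same seed and the same moves reproduce every outcome. In a design like this, knowing the seed means knowing the whole game.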

What the Math Looked Like

Behind the curtain, all three planets obeyed sinusoidal survival probability functions. The patterns weren't obvious from limited sampling, and one planet in particular lured players in with a deceptive stability that collapsed under scrutiny. This ambiguity turned the challenge into a global sandbox for 500+ developers across 34 countries, who collectively initiated over 14 million games in a stunning showcase of algorithmic diversity.
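One way to write such a curve is shown below. Each planet $i$ gets a baseline $a_i$, an amplitude $b_i$, a period $T_i$ measured in trips, and a phase offset $\varphi_i$, all derived from the seed. The symbols are illustrative, not the engine's actual parameterization. The second line explains why limited sampling hid the pattern. Each trip returns a single survived/died bit. If $p_i$ is roughly constant over a window of $n$ trips, an estimate from that window has the standard binomial error:

```latex
p_i(t) = \min\!\Bigl(1,\ \max\bigl(0,\ a_i + b_i \sin\!\bigl(\tfrac{2\pi t}{T_i} + \varphi_i\bigr)\bigr)\Bigr),
\qquad i \in \{1, 2, 3\}

\mathrm{SE}\bigl[\hat{p}_i\bigr] = \sqrt{\frac{p_i(1 - p_i)}{n}},
\qquad \text{e.g. } \sqrt{\frac{0.7 \times 0.3}{25}} \approx 0.092
```

An error bar of roughly ±0.09 after 25 trips is the same size as a modest swing in the curve. Any short run of samples can therefore look flat, or look like a different sinusoid entirely.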

To hunt down the optimal strategy, contestants threw the mathematical kitchen sink at our servers: Bayesian phase tracking with discretized candidates, Fourier transforms to isolate portal frequencies, dynamic programming to maximize variance rather than expected value, and even gradient-boosted models trained on GPUs. One standout contestant bypassed code-heavy analysis entirely, converting return data into a colored HTML grid and matching patterns by eye just by resizing their browser window.

But the most aggressive strategy was pure efficiency: "hot spotting," or killing runs within the first 10 steps to fish for a better random seed. It proved that sometimes the best way to solve a math problem is to restart the universe until the odds are in your favor.
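Of the techniques listed above, Bayesian phase tracking with discretized candidates is the easiest to sketch. This is not any contestant's code. It assumes the baseline, amplitude, and period are already known (the defaults in `survival` are made up), so only the phase is uncertain. The tracker keeps a probability weight for each of a fixed grid of candidate phases and reweights them by the likelihood of every observed outcome.

```python
import math
import random


def survival(t, phase, base=0.6, amp=0.25, period=60.0):
    """Assumed sinusoidal survival curve; base, amp and period are taken as known."""
    return min(1.0, max(0.0, base + amp * math.sin(2 * math.pi * t / period + phase)))


class PhaseTracker:
    """Discrete Bayesian filter over candidate phase offsets for one planet."""

    def __init__(self, n_candidates=72):
        self.phases = [2 * math.pi * k / n_candidates for k in range(n_candidates)]
        self.weights = [1.0 / n_candidates] * n_candidates  # uniform prior

    def update(self, t, survived):
        """Bayes' rule: scale each candidate by the likelihood of the observed outcome."""
        for k, phase in enumerate(self.phases):
            p = survival(t, phase)
            self.weights[k] *= p if survived else 1.0 - p
        total = sum(self.weights)
        self.weights = [w / total for w in self.weights]

    def predict(self, t):
        """Posterior-mean survival probability for trip t."""
        return sum(w * survival(t, ph) for w, ph in zip(self.weights, self.phases))

    def map_phase(self):
        """Most probable candidate phase."""
        return self.phases[max(range(len(self.weights)), key=self.weights.__getitem__)]
```

A simple greedy policy built on this would run one tracker per planet and send each group to the planet with the highest `predict(t)`. The grid size trades resolution for speed. With 72 candidates each step is 5 degrees, and the update loop stays trivially cheap.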

The Hidden Trick

Here's where it got interesting, and where some contestants outsmarted us: because the same seed controlled all game parameters, the planets' phase offsets couldn't vary independently. There were only as many phase combinations as there were seeds. A few sharp contestants figured this out and realized they could make faster assumptions about phase relationships: if you understood one planet's current state, you had information about the others. We hadn't anticipated anyone would reverse-engineer this relationship from the API responses, but the top performers did exactly that.
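To make that concrete, suppose the engine derived every planet's phase from the seed in a fixed order. Then a rough estimate of one planet's phase filters the whole seed space, and the surviving seeds predict the other planets' phases. The sketch below assumes such a mapping. `phases_for_seed` is hypothetical, not the real engine, and the sketch treats "0 to 1000" as inclusive, giving 1,001 seeds.

```python
import math
import random


def phases_for_seed(seed, planets=3):
    """Hypothetical: derive each planet's phase offset from the shared seed."""
    rng = random.Random(seed)
    return [rng.uniform(0, 2 * math.pi) for _ in range(planets)]


def circular_gap(a, b):
    """Smallest angular distance between two phases, in radians."""
    return abs((a - b + math.pi) % (2 * math.pi) - math.pi)


def candidate_seeds(observed_phase, tolerance, planet=0, seeds=range(1001)):
    """Seeds whose phase for `planet` lies within `tolerance` radians of the estimate."""
    return [
        s for s in seeds
        if circular_gap(phases_for_seed(s)[planet], observed_phase) <= tolerance
    ]
```

A ±0.1 rad estimate keeps roughly 0.2 / 2π, about 3%, of the seeds. Each survivor implies a specific phase for the other two planets. A rough estimate for a second planet can then be fed back through `candidate_seeds(..., planet=1, seeds=previous_candidates)` to narrow the list further.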

When Scale Became Strategy

The top performers didn't just write better algorithms; they deployed them at scale. Every winner ran their solutions on remote infrastructure rather than local machines, with AWS workers grinding through millions of game attempts in parallel.
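The post doesn't describe the winners' setups beyond AWS workers, so what follows is only a sketch of the general pattern. It fans independent episodes out across a worker pool and kills any run whose first 10 trips look unlucky, the "hot spotting" trick described earlier. Several pieces are hypothetical: the toy single-planet game, the naive always-send-three policy, and the threshold of 8 survivals in 10 trips. A thread pool is used because a real episode is a chain of HTTP calls, which is I/O-bound. Locally, "a new seed" is just the next integer. Against the real API, it would be whatever seed a fresh episode happened to get.

```python
import math
import random
from concurrent.futures import ThreadPoolExecutor


def play(seed, probe_trips=10, min_probe_survivals=8):
    """Play one toy episode, abandoning it after `probe_trips` if too few trips survived.

    Returns (seed, saved), with saved = None for a run killed early.
    """
    rng = random.Random(seed)
    phase = rng.uniform(0, 2 * math.pi)  # toy game: one planet with a seed-derived phase
    remaining, saved, trip, survivals = 1000, 0, 0, 0
    while remaining > 0:
        count = min(3, remaining)
        p = 0.6 + 0.3 * math.sin(2 * math.pi * trip / 50 + phase)
        if rng.random() < p:
            saved += count
            survivals += 1
        remaining -= count
        trip += 1
        if trip == probe_trips and survivals < min_probe_survivals:
            return seed, None  # hot-spot check failed: abandon and try another episode
    return seed, saved


def farm(seeds, workers=8):
    """Run episodes concurrently; return (best completed run, number of completed runs)."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(play, seeds))
    finished = [r for r in results if r[1] is not None]
    best = max(finished, key=lambda r: r[1]) if finished else None
    return best, len(finished)
```

Each call builds its own `random.Random`, so workers share no mutable state. Scaling out then mostly means splitting the episode budget across more machines.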

The Competitive Bloodsport

The leaderboard was a bloodbath. For a while, there was a four-way tie for first place at exactly 93.3%, leaving us sweating over how to split the prize pool. These players had effectively solved the math.

Then a postdoc from Harvard casually posted 93.7% and walked away with it. She figured out something the others missed (probably the full sinusoidal behavior across all three planets) and squeezed out the extra 0.4 percentage points that separated good from great.

In the AI world, Claude Code outperformed GPT-5, achieving 60.2%. Respectable for an AI, but nowhere near competitive. The challenge succeeded in what we set out to do: create something that required human engineering judgment on top of code generation capabilities.

This challenge proved that while AI can write code, it can't yet replace the engineer's ability to observe a system, hypothesize about its hidden constraints, and deploy a scalable architecture to exploit them.

In the end, 937 Morties made it to Planet Jessica. As for the other 63? Well, that's the cost of doing business with knock-off portal guns.